RAND Europe featuring the Digital Trust Label - Exploring ways to regulate and build trust in AI

Jessica Espinosa, May 2022

On 27 April, RAND Europe hosted a virtual roundtable to present and discuss the findings of its research “Labelling initiatives, codes of conduct and other self-regulatory mechanisms for artificial intelligence applications: From principles to practice and considerations for the future”. The event brought together a range of stakeholders, including experts who were interviewed for the study, policymakers, researchers and industry representatives. The Digital Trust Label was featured in this research as an example of an operational labelling initiative that denotes the trustworthiness of a digital service.

During the introductory remarks, Isabel Flanagan, one of the lead researchers, highlighted that building trust in AI products and services is vital for building support for these technologies and motivating their widespread uptake. She also noted that the growing need for trustworthy AI is being recognised in European Union (EU) policy frameworks, such as the 2019 Ethics Guidelines for Trustworthy AI and the AI Act proposed in 2021.

In this context, RAND Europe carried out a research project, commissioned by Microsoft, to explore relevant examples of self-regulatory policy mechanisms for low-risk AI technologies, as well as the opportunities and challenges associated with them. As part of this research, the RAND team reached out to the Swiss Digital Initiative (SDI) to gain deeper insight into our Digital Trust Label and to better understand how it contributes to the trustworthiness of a digital service.

In total, the RAND team identified 36 different initiatives. These initiatives are highly diverse: some are still at an early stage of conceptualisation, while others are already being implemented. They come from a wide range of countries across the globe; some are intended for local implementation, while others aim for a more global reach. Some initiatives are also designed for particular sectors, such as healthcare and manufacturing, while others champion particular causes, such as gender equality or environmentalism.

Moreover, the research shows that most self-regulatory mechanisms fall into two main categories: labels and certifications, such as the Digital Trust Label, and codes of conduct. On the one hand, certifications and labels define standards for AI algorithms, and applications are assessed against a set of criteria, generally through an audit. They resemble energy or food labels, as they are meant to signal to consumers that the AI product they are using is reliable and safe. On the other hand, codes of conduct do not involve an assessment against measurable criteria; instead, they consist of a set of principles and standards that an organisation should uphold when developing AI applications. Nevertheless, the two categories share some common aims, such as increasing user uptake, elevating trust by signalling reliability and quality, promoting the transparency and comparability of AI applications, and helping organisations understand emerging standards and good practices in the field.

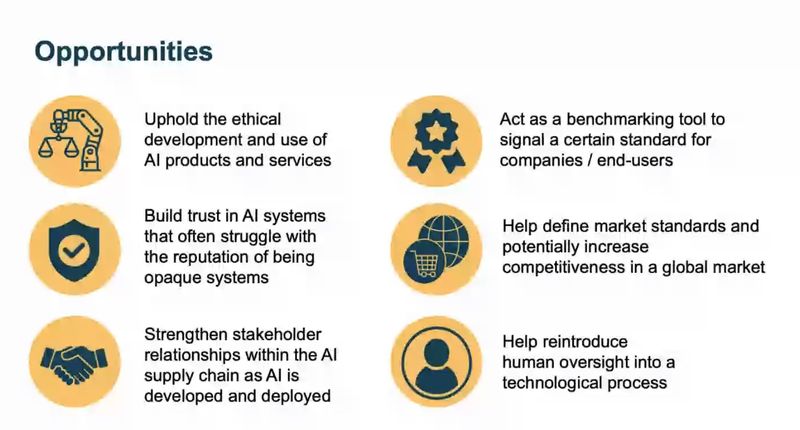

As part of its results, the RAND team identified several opportunities in the design, development and implementation of self-regulatory mechanisms.

Source: “Labelling initiatives, codes of conduct and other self-regulatory mechanisms for artificial intelligence applications: From principles to practice and considerations for the future” by RAND Europe, 2022 [https://www.rand.org/pubs/research_reports/RRA1773-1.html]

However, as with any other technology, opportunities come with new challenges. Through its research, the RAND team concluded that the main challenges for the regulation of AI are:

- The variety and complexity of AI systems can lead to challenges in developing assessment criteria.

- Ethical considerations depend on the field of application as well as on the cultural context in which the AI system operates, which makes it difficult to predefine a single set of criteria for all scenarios and use cases of AI applications.

- The evolving nature of AI technology makes it challenging for self-regulatory processes to keep up. This is why the SDI constantly evaluates and further develops the Digital Trust Label based on recommendations from experts.

- The development of self-regulatory tools must consider the needs of multiple stakeholders to ensure widespread buy-in and adoption. The Digital Trust Label was developed through a multi-stakeholder approach involving consumers, representatives of the private and public sectors, and civil society, to ensure that all views and perspectives were included.

- Market incentives are needed, as the cost and burden for smaller companies might otherwise discourage them from adopting self-regulatory mechanisms.

- There are trade-offs between protecting consumers and driving innovation and competition. If a certification or label is hard to obtain, it can make it difficult for new companies to enter the market.

- The large number of initiatives and the fragmented ecosystem of self-regulatory mechanisms, each with varying levels of complexity, development and criteria, might cause regulatory confusion. This could reduce trust and make it harder to compare different AI systems on the market.

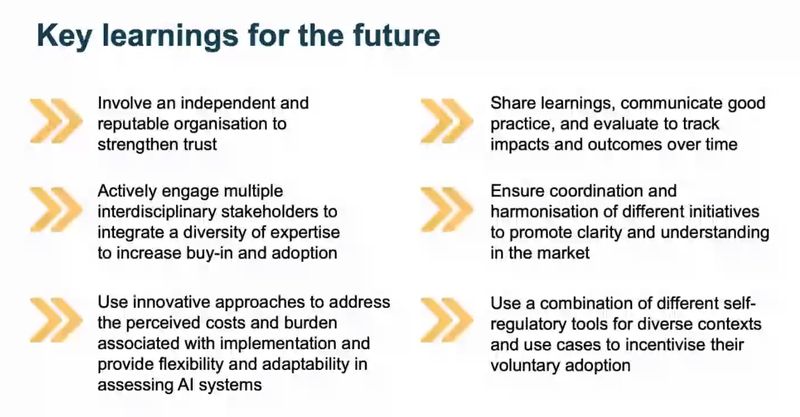

Finally, the presentation concluded with key learnings that, according to the RAND team, should guide the future design, development and incentivisation of self-regulatory mechanisms for AI systems.

Source: “Labelling initiatives, codes of conduct and other self-regulatory mechanisms for artificial intelligence applications: From principles to practice and considerations for the future” by RAND Europe, 2022 [https://www.rand.org/pubs/research_reports/RRA1773-1.html]

After the presentation of the results, the event continued with a series of smaller-group roundtables and discussions on the future of AI regulation. Here are some of our main takeaways:

- AI is embedded in many types of products and services. In most cases, the product is also connected to a service, which makes the picture complex. When developing self-regulatory tools, it is therefore very important to be clear about their scope. A label, code of conduct or certification cannot cover everything; it should state clearly what is being self-regulated, whether that is a specific product, a set of services, or a single feature within a specific set of services.

- Voluntary AI audits can suffer from selection bias: the companies that volunteer for them are usually those that are forward-thinking, concerned about the challenges posed by AI algorithms, or have staff with a strong interest in particular AI issues.

- Customer demand is the main driver of, and the biggest incentive for, self-regulatory mechanisms: they succeed when customers ask for them. It is therefore important to develop a tool that consumers can understand, while striking a balance between being technically meaningful and being easy for everyone to understand.

- For future AI self-regulatory mechanisms, it is important to specify whether they apply at the level of an AI tool that is used by many customers or at the level of each customer’s implementation. In the former case, the audit can guarantee that the tool itself is reliable and safe, but the organisation using it has to make sure that it is implemented and used correctly: the tool has been shown to be trustworthy, but how it is maintained and used remains the organisation’s responsibility. The scope of the mechanism is therefore key. By implementing certifications or labels for products as well as for organisations as a whole, a genuine chain of trust can be built among different stakeholders.

- Finally, there needs to be something beyond the audit itself that leaves room for the continuous improvement and innovation of certifications, labels and codes of conduct.

Bringing trust and ethics back into tech

Through our experience and learnings in developing the Digital Trust Label (DTL), we can help address many of the identified challenges. The Digital Trust Label is one of the first of its kind. By using clear, visual, plain and non-technical language, it denotes the trustworthiness of digital services in a way that everyone can understand. By combining the dimensions of security, data protection, reliability and fair user interaction, the DTL takes a holistic approach to the complex question of digital trust. Recognising the fast pace of technological innovation, the DTL was designed to be continuously adapted to meet the challenges of digital transformation.